Studies Show?

How often do journalists breathlessly repeat that “studies show”?

It also seems to be an almost daily occurrence that this or that is ranked number one, or dead last. Click here to find out more!

Today anyone who is wary of a “study” is accused of being against science, and therefore beneath contempt. We all learned the scientific method in grade school: form a hypothesis, conduct an experiment with a sample group and a control group, then compare results. Fair enough, but the real world is more complicated.

Potential problems with studies:

Pressure to publish. There is a saying in academia: “Publish or perish.” With all the people getting PhDs these days, it is extremely competitive to get on track to becoming a tenured professor. There is the idea that a professor is only partly there to teach, and is mainly there to do research to advance the subject. Whether that is right or wrong, it links the progression of one’s career with publishing papers.

Insufficient sample sizes or improper samples. After an exam in an intro to psychology course I was taking, we were asked if we could stay and fill out a survey for a psychology grad student’s study. This was a high-pressure college, and soon a student asked if they really had to stay; they wanted to leave and study for the next exam. So as people finished the exam, at least half the students left. I think the survey had something to do with respect for authority, but I am not sure, as I was one of the ones who walked out. So, here is a study about the half of the students who did not walk out, at a fairly small science college where (no joke) students would show up in the dining hall wearing Star Fleet uniforms. That group was not representative of anything but itself. I hope the results did not get published.

Another example of this would be phone surveys. Now how many people out there never answer the phone if it is a number they do not recognize? At least half? More? And when do these calls go out? During the day and at night? People at work during the day may not be in a position to answer all such calls. And who is going to stay on the line and do the survey? People with nothing better to do, desperate to talk to another human? How representative is that of a nation as a whole?

Correlation vs causation. Just because something seems to go along with something else, it does not prove that one thing causes the other. For example, nearly everyone who dies in a hospital has an IV. So do IVs cause death? Another example: in the early nineties there was a study that said that drivers using standard transmissions got into more accidents than those using automatics. The conclusion was that shifting gears manually was distracting. Maybe. Or perhaps two groups of people were using standards back then: sports car enthusiasts and people driving inexpensive entry-level cars. People with decades of experience, who did not buy cars meant to break the speed limit, drove automatics. So was the stick shift the cause of the accidents, or was it simply correlated with new drivers and drivers who liked to drive fast?
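To make the stick shift example concrete, here is a small sketch with made-up numbers. The counts, the group names, and the `rate` helper are all my own assumptions for illustration, not figures from that study. In this invented data the transmission makes no difference at all within each kind of driver, yet stick shifts still look far worse overall, simply because more of the risky drivers happen to drive them.

```python
# Hypothetical counts, invented for illustration:
# (transmission, driver_group) -> (number of drivers, number of accidents)
counts = {
    ("stick", "new_or_fast"): (800, 80),
    ("stick", "experienced"): (200, 4),
    ("automatic", "new_or_fast"): (200, 20),
    ("automatic", "experienced"): (800, 16),
}

def rate(transmission, group=None):
    """Accident rate for a transmission, optionally within one driver group."""
    drivers = accidents = 0
    for (trans, grp), (n, a) in counts.items():
        if trans == transmission and (group is None or grp == group):
            drivers += n
            accidents += a
    return accidents / drivers

# Overall, stick shifts look much more dangerous.
print(f"stick overall:     {rate('stick'):.1%}")
print(f"automatic overall: {rate('automatic'):.1%}")

# Within each driver group, the transmission makes no difference.
for group in ("new_or_fast", "experienced"):
    print(f"{group}: stick {rate('stick', group):.1%}, "
          f"automatic {rate('automatic', group):.1%}")
```

The only thing that differs between the two transmissions here is who is behind the wheel.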

Poor statistics. Many scientists, social science or hard science, may have had at most one intro-level course in statistics. Then, years later, they are supposed to use statistics to determine the proper sample size for their studies, and do the math to determine whether the hypothesis they are looking at cannot be rejected at a given level of probability. First of all, there is a lot riding on a proper statistical analysis by someone who may never have been all that great at statistics. Also, there has to be some threshold of statistical significance, and the usual level is 5%. Loosely, that means accepting a 5% chance of seeing an effect that is not really there. So, in theory, could 1 out of 20 studies of a nonexistent effect come out “significant” just on that basis? Surely a reason for studies to be replicated by other researchers before putting much weight behind them.
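The 1 in 20 arithmetic can be checked with a rough sketch. It assumes every study is looking for an effect that does not exist, and that each study's p-value is then equally likely to land anywhere between 0 and 1 (a standard textbook simplification). The function names and the seed are my own choices for illustration.

```python
import random

ALPHA = 0.05  # the conventional significance threshold

def false_positive_rate(n_studies, alpha=ALPHA, seed=0):
    """Simulate studies of an effect that does not exist.

    Each study is modeled as one uniform draw standing in for its p-value;
    it "finds" an effect whenever that draw falls below alpha.
    """
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_studies) if rng.random() < alpha)
    return hits / n_studies

def chance_of_at_least_one(n_tests, alpha=ALPHA):
    """Chance that at least one of n independent no-effect tests looks significant."""
    return 1 - (1 - alpha) ** n_tests

rate = false_positive_rate(100_000)
print(f"Share of no-effect studies that look significant: {rate:.3f}")
print(f"Chance of at least one false alarm in 20 tries: {chance_of_at_least_one(20):.2f}")
```

Under these assumptions about 5% of the no-effect studies come out "significant", and a researcher who gets 20 tries has roughly a 64% chance of at least one false alarm.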

The file drawer problem. Professor Huffnpuff has a grant to research question X. He researches it, and finds something unexpected. It is an answer no one wants to hear. So it does not get published, but is instead forgotten about in a file drawer somewhere.

Failure to replicate. It is recognized that studies are only meaningful when other, independent researchers have conducted an experiment along the same lines and gotten the same results. Until a study is replicated it should not be given much credence. See articles here and here.

An ounce of findings, and a pound of jumping to conclusions. A potential non sequitur (the Latin phrase for “it does not follow”). Say two groups are shown images, some yellow and some green. Group A prefers yellow. Group B prefers green. Martians are green. Therefore the researchers conclude that group B are Martians! Even if the paper says that the researchers are only speculating on the potential reason for the findings, the media will state it as scientific fact proven by the study.

Bad models. A model is a function where certain inputs predict certain outputs, especially in regard to computer systems trying to predict things. When I made computer models for industrial plants, the smart managers would ask: if old inputs were put into the model, would it have accurately predicted the past? And if not, how far off was it? Anyone saying that their computer models should not be expected to predict past outcomes when fed past data is hiding something.
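The question those managers asked amounts to a backtest. Here is a minimal sketch of the idea. The `plant_model` function and the `history` records are invented for illustration and do not come from any real plant.

```python
def backtest(model, history):
    """Feed past inputs to the model and return its mean absolute error."""
    errors = [abs(model(inputs) - actual) for inputs, actual in history]
    return sum(errors) / len(errors)

# Hypothetical model: predicts plant output from the feed rate.
def plant_model(feed_rate):
    return 2.0 * feed_rate + 5.0

# Hypothetical past records: (feed rate, output actually measured).
history = [(10.0, 26.0), (20.0, 44.0), (30.0, 67.0)]

average_miss = backtest(plant_model, history)
print(f"On past data the model was off by {average_miss:.2f} on average")
```

Whether a given miss is acceptable depends on the plant, but a modeler should at least be able to produce the number.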

Too good not to be true. Back to that intro psychology class, one chapter really caught my attention. It was discussing a controversial researcher. It readily admitted that his survey samples were not at all representative of the public, and not statistically significant to boot, but darn it, they really liked the results, so that research they were going to keep! That is not science but blind faith.

Cherry picking / reverse engineering the results. Is a study constructed in a straightforward manner? For example, if a study is attempting to determine if cars painted red get more speeding tickets than cars painted gray, the obvious way to do it would be to collect a statistically significant sample of speeding tickets, match license plates to VINs, and determine what color each car was when it left the factory. If instead the study was “we did a random phone survey of people in Detroit and asked them what color of cars they saw pulled over the most,” then the study is junk.

One tendency is to get lots of data points, and try out combination after combination of them until the right answer comes out. Maybe it is comparing violence before and after a law is passed. The obvious approach is to compare the rate before and after, state-wide. But maybe that does not say what people want to hear, so instead they sift and sift and then announce that, well, the law was bad because, comparing cities with populations between 55,000 and 67,000 people during months ending in the letter r, the rate of violence was 0.001517% worse. No one would have sat down, before looking at the data, and come up with those criteria.
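Here is a rough sketch of how that sifting manufactures a result. Everything in it is invented: 200 hypothetical cities whose change in violence is pure random noise, so the law has no effect at all. The cities are sliced into 20 population bands and only the worst-looking band gets reported.

```python
import random

rng = random.Random(42)

# Hypothetical data: (population, change in violence rate after the law).
# The changes are pure noise, so the law truly has no effect here.
cities = [(rng.randint(10_000, 500_000), rng.gauss(0.0, 1.0)) for _ in range(200)]

def mean(values):
    return sum(values) / len(values)

# The apples to apples comparison: every city in the state.
overall = mean([change for _, change in cities])

# The sifting: sort by population, cut into 20 bands of 10 cities each,
# and keep only the band where things look worst.
by_population = sorted(cities)
bands = [by_population[i:i + 10] for i in range(0, len(by_population), 10)]
worst_band = max(bands, key=lambda band: mean([change for _, change in band]))
worst = mean([change for _, change in worst_band])
low, high = worst_band[0][0], worst_band[-1][0]

print(f"All cities: average change {overall:+.3f}")
print(f"Cities of {low:,} to {high:,} people: average change {worst:+.3f}")
```

The worst band can never look better than the state as a whole, and with 20 bands to choose from it will usually look a good deal worse, even though nothing real is going on.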

If it is not an apples to apples study, be wary.

Reverse engineering the result. Lawyers say that one should never ask a question that one does not know the answer to. Does the “study” try to get to the right answer by engineering the question? One infamous study asked Catholic women if they used artificial contraception and came up with a huge percentage. In the fine print, however, was the caveat that they only polled women who were married but did not have children. Whoa! Hold on there. Couples are not supposed to get married in that Church unless they are open to children from the day of marriage until such time as circumstances may entail the use of natural family planning. So, besides couples who were naturally infertile, what was the population being studied? Those who rejected Church teaching. So the study was really about what percent of people who reject Church teaching reject Church teaching. Need a study to figure that one out?

Poor Peer Review. Given all the potential flaws in studies, they are supposed to be vetted by professionals in the field before being published. For one thing, there are the “vanity” journals that will publish anything for a price, so the mere fact that a study was published may not mean much. But what of the refereed journals? There is a disturbing trend of people submitting completely bogus papers and getting them published. There are examples of that here, here, and here.

Rankings

There are click-bait headlines all over the web, and in the news, that this or that is ranked top or dead last. There is something about modern man that loves to rank everything in a list by some “scientific” criteria. This idea would have been completely alien to people a hundred years ago.

The problem with them all is, well, who determines the data points to rank, and how are those data points factored in?

Here is an example: the State Gazette wants to rank the three colleges in the state, Podunk Tech, Wassamata U, and Poison Ivy League. Let us say the reporter decides to look at the following:

- student to professor ratio

- the number of books in the library

- the % of students graduating in 4 years

- the % of graduates gainfully employed the year after graduation

- the % of students going to grad school

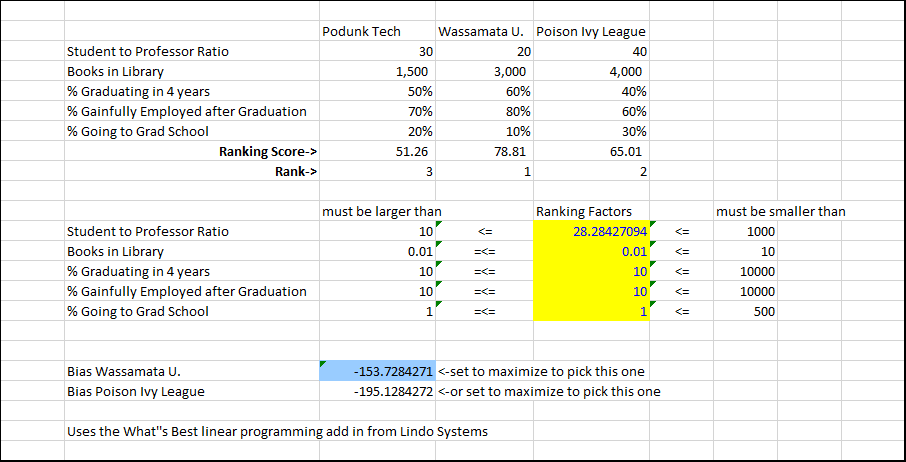

Reasonable enough, sort of. But they cannot all just be added together. The smaller the student to professor ratio the better, while the larger the number of books in the library the better. The units differ too: books are in thousands, students per professor are in tens, and the others are in percents. So there have to be ranking factors for each variable. The spreadsheet below has ranking factors highlighted in yellow.

Let’s say that the reporter went to Wassamata U and really liked it. If the reporter uses the ranking factors below, then Wassamata U wins.

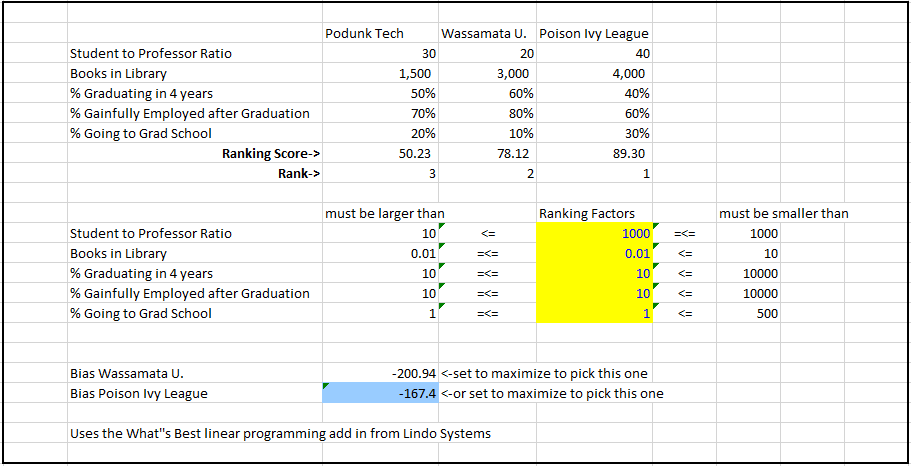

But what if the editor comes along and says that Poison Ivy League had better come out on top? The reporter only has to revise the ranking factors for Poison Ivy League to come out on top.

In this example, to make it easy, a linear programming add-in to Excel was used, so with a few mouse clicks any of the three schools could be made to win.
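The same trick can be sketched in a few lines of code. The figures and weights here are invented for illustration; they are not the spreadsheet's values, and the weights are picked by hand rather than by a linear programming add-in. Each measure is rescaled to run from 0 (worst of the three schools) to 1 (best), with the student to professor ratio flipped because smaller is better, and then the ranking factors are applied.

```python
# Hypothetical figures, invented for illustration only.
SCHOOLS = {
    "Podunk Tech":       {"ratio": 10, "books": 100, "grad4": 55, "employed": 95, "gradschool": 5},
    "Wassamata U":       {"ratio": 25, "books": 400, "grad4": 80, "employed": 85, "gradschool": 15},
    "Poison Ivy League": {"ratio": 15, "books": 900, "grad4": 70, "employed": 60, "gradschool": 45},
}
LOWER_IS_BETTER = {"ratio"}  # students per professor: smaller is better

def normalize(schools):
    """Rescale every measure to 0..1 so different units can be combined."""
    metrics = list(next(iter(schools.values())))
    scores = {name: {} for name in schools}
    for metric in metrics:
        values = [data[metric] for data in schools.values()]
        low, high = min(values), max(values)
        for name, data in schools.items():
            scaled = (data[metric] - low) / (high - low)
            scores[name][metric] = 1 - scaled if metric in LOWER_IS_BETTER else scaled
    return scores

def winner(schools, weights):
    """Apply the ranking factors and return the top school."""
    scores = normalize(schools)
    totals = {name: sum(weights[m] * s[m] for m in weights) for name, s in scores.items()}
    return max(totals, key=totals.get)

# Three hand-picked sets of ranking factors, each summing to 1.
REPORTER = {"ratio": 0.1, "books": 0.1, "grad4": 0.5, "employed": 0.3, "gradschool": 0.0}
EDITOR   = {"ratio": 0.1, "books": 0.4, "grad4": 0.1, "employed": 0.0, "gradschool": 0.4}
UNDERDOG = {"ratio": 0.5, "books": 0.0, "grad4": 0.0, "employed": 0.5, "gradschool": 0.0}

for label, weights in [("Reporter", REPORTER), ("Editor", EDITOR), ("Underdog", UNDERDOG)]:
    print(f"{label}'s factors: {winner(SCHOOLS, weights)} wins")
```

Same schools, same data, three different winners. The only thing that changed was the opinion baked into the ranking factors.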

So the next time there is a report that city X has the worst drivers, or state A has the best medical care, etc., what was the basis for the ranking? And in the silly example above about ranking schools, are quantitative rankings all that meaningful unless they are qualified? For example, are part-time professors counted with the professors? Do the books in the library include a complete set of Reader’s Digest Condensed books that someone donated? Of those employed after graduation, what % are employed in their fields of study?

Sometimes studies and rankings are just clever methods of avoiding common sense.